[System Design Tech Case Study Pulse #26] Processing 2 Billion Daily Queries: How Facebook Graph Search Actually Works

With a detailed explanation and flow chart.

Hi All,

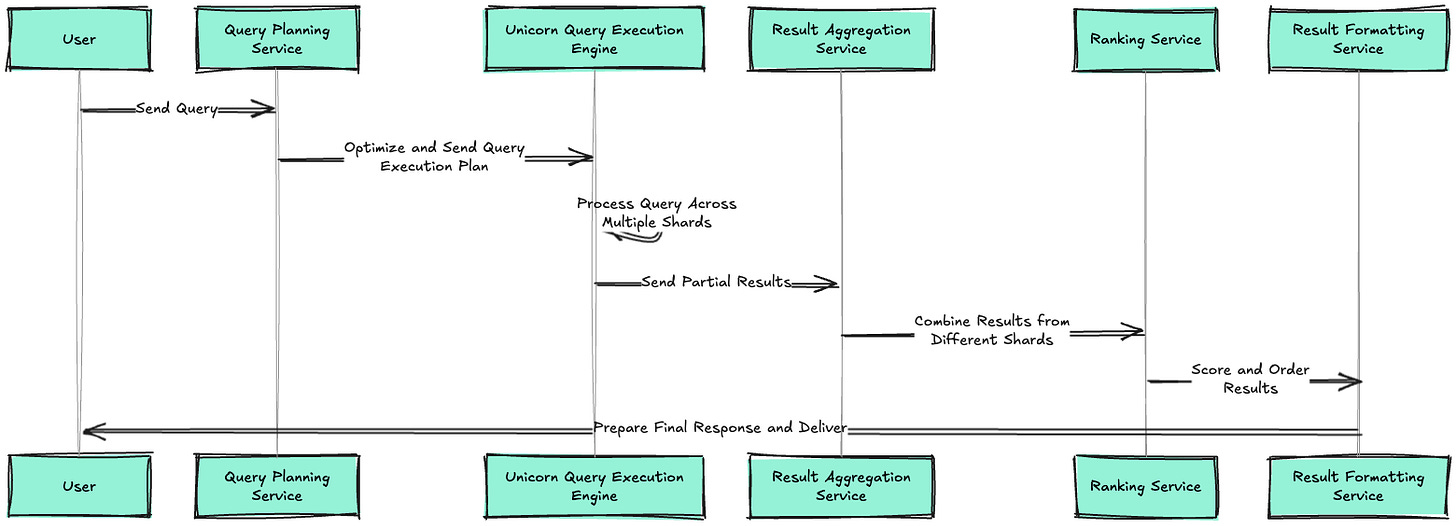

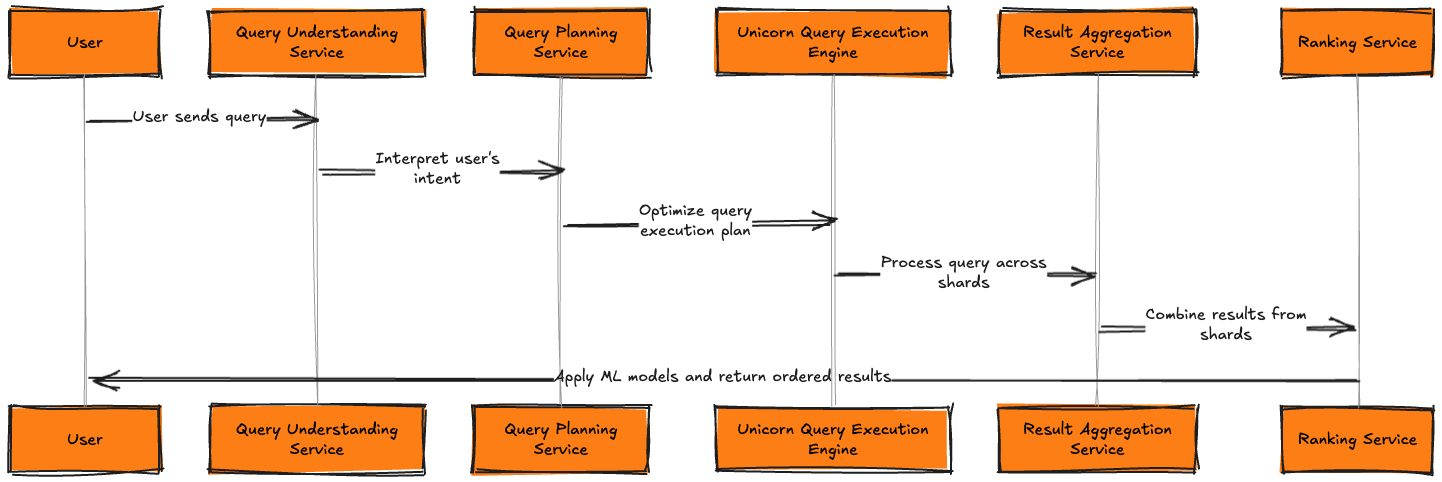

Facebook's Graph Search processes 2 billion queries a day. It relies on Unicorn, a custom-built inverted index and retrieval system, and on Apache Thrift for efficient inter-service communication. Together they form the backbone of Facebook's ability to serve real-time, personalized search results across its vast social graph.
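To make "inverted index" concrete: an inverted index maps each term to a posting list of the IDs of entities that contain it, and a query with several terms is answered by intersecting those lists. The Python sketch below is a toy, single-process illustration of that idea, not Unicorn's actual implementation. The class `InvertedIndex`, its methods, and the `edge-type:value` term format (e.g. `friend:1`) are hypothetical names chosen for illustration.

```python
import bisect
from collections import defaultdict


class InvertedIndex:
    """Toy inverted index: each term maps to a sorted posting list of entity IDs."""

    def __init__(self) -> None:
        self._postings: dict[str, list[int]] = defaultdict(list)

    def add(self, entity_id: int, terms: list[str]) -> None:
        """Index an entity under each of its terms, keeping posting lists sorted."""
        for term in terms:
            plist = self._postings[term]
            pos = bisect.bisect_left(plist, entity_id)
            if pos == len(plist) or plist[pos] != entity_id:  # ignore duplicates
                plist.insert(pos, entity_id)

    def lookup(self, term: str) -> list[int]:
        """Return a copy of the posting list for one term (empty if unknown)."""
        return list(self._postings.get(term, []))

    @staticmethod
    def _intersect(a: list[int], b: list[int]) -> list[int]:
        """Two-pointer merge of two sorted posting lists."""
        i = j = 0
        out: list[int] = []
        while i < len(a) and j < len(b):
            if a[i] == b[j]:
                out.append(a[i])
                i += 1
                j += 1
            elif a[i] < b[j]:
                i += 1
            else:
                j += 1
        return out

    def query_and(self, *terms: str) -> list[int]:
        """Entities matching every term, e.g. friends of user 1 who live in Paris."""
        # Start from the shortest posting list so intermediate results stay small.
        lists = sorted((self.lookup(t) for t in terms), key=len)
        if not lists:
            return []
        result = lists[0]
        for plist in lists[1:]:
            result = self._intersect(result, plist)
        return result


if __name__ == "__main__":
    index = InvertedIndex()
    # Hypothetical "edge-type:value" terms for a tiny social graph.
    index.add(101, ["friend:1", "lives-in:paris"])
    index.add(102, ["friend:1", "lives-in:london"])
    index.add(103, ["friend:2", "lives-in:paris"])
    print(index.query_and("friend:1", "lives-in:paris"))  # [101]
```

Starting the intersection from the shortest posting list keeps the work proportional to the rarest term. A production system at Facebook's scale would also shard posting lists across many servers and rank the merged results, which this sketch leaves out.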

Let me dive deep into how this system works, exploring the key components, technologies, and processes that enable such massive-scale, low-latency graph search capabilities.


System Overview

Before we delve into the search architecture, let us look at some key metrics of Facebook's Graph Search system:

- Daily search queries: 2 billion+

- Peak queries per second: 100,000+

- Indexed entities: Trillions (users, posts, pages, etc.)

- Edge types in the graph: 100,000+

- Average query latency: < 100ms

- Unicorn servers: 1,000+

- Data centers: 10+

- Daily index updates: Billions

- Query types supported: Keyword, structured, natural language

- Languages supported: 100+

- System availability: 99.99%

- Index size: Petabytes of data

- Thrift RPC calls per query: 50+ on average
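The metrics above can be sanity-checked with some back-of-the-envelope arithmetic. This Python sketch uses the lower bounds from the list (the "+" figures) and assumes, unrealistically, that load spreads perfectly evenly across servers. `capacity_estimate` is a hypothetical helper for illustration, not part of any Facebook system.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def capacity_estimate(
    daily_queries: int = 2_000_000_000,  # daily search queries (2 billion+)
    peak_qps: int = 100_000,             # peak queries per second (100,000+)
    rpcs_per_query: int = 50,            # Thrift RPC calls per query (50+ on average)
    servers: int = 1_000,                # Unicorn servers (1,000+)
) -> dict[str, float]:
    """Back-of-the-envelope load figures derived from the quoted metrics."""
    avg_qps = daily_queries / SECONDS_PER_DAY
    peak_rpcs_per_sec = peak_qps * rpcs_per_query
    return {
        "avg_qps": avg_qps,
        "peak_to_avg": peak_qps / avg_qps,
        "peak_rpcs_per_sec": peak_rpcs_per_sec,
        # Assumes RPCs are spread perfectly evenly across servers.
        "rpcs_per_server": peak_rpcs_per_sec / servers,
    }


if __name__ == "__main__":
    est = capacity_estimate()
    print(f"average QPS:       {est['avg_qps']:,.0f}")         # 23,148
    print(f"peak / average:    {est['peak_to_avg']:.1f}x")     # 4.3x
    print(f"peak Thrift RPC/s: {est['peak_rpcs_per_sec']:,}")  # 5,000,000
    print(f"RPC/s per server:  {est['rpcs_per_server']:,.0f}") # 5,000
```

At roughly 23,000 queries per second on average, the quoted peak of 100,000+ QPS is about 4.3 times the daily mean, and 50 RPCs per query at that peak implies millions of Thrift calls per second. That volume helps explain the emphasis on an efficient RPC layer.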


How it works:

1. The user submits a search query through the Facebook app or website.