Principal Component Analysis (PCA) is a mathematical method that transforms high-dimensional data into a lower-dimensional form while preserving as much of the original data's variance as possible. It does this by finding a new set of axes, called principal components, along which the data varies the most.

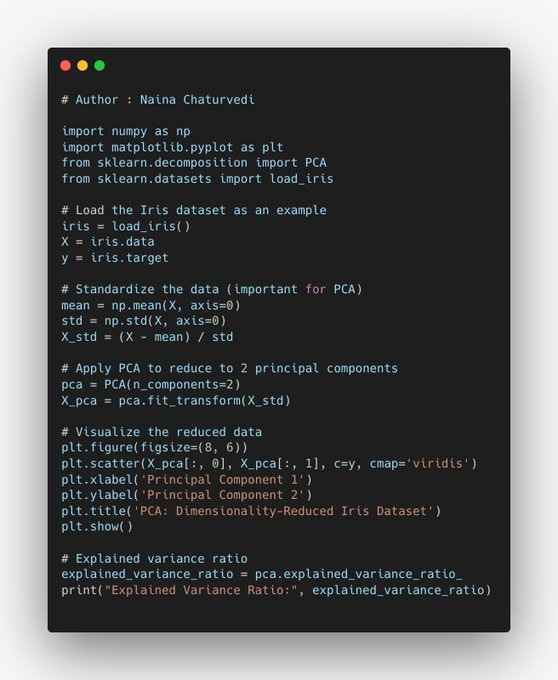

1/ PCA is a linear transformation method that aims to reduce the dimensionality of a dataset while preserving as much of the original variance as possible. It does this by identifying a new set of uncorrelated variables, called principal components, that are linear combinations of the original features.

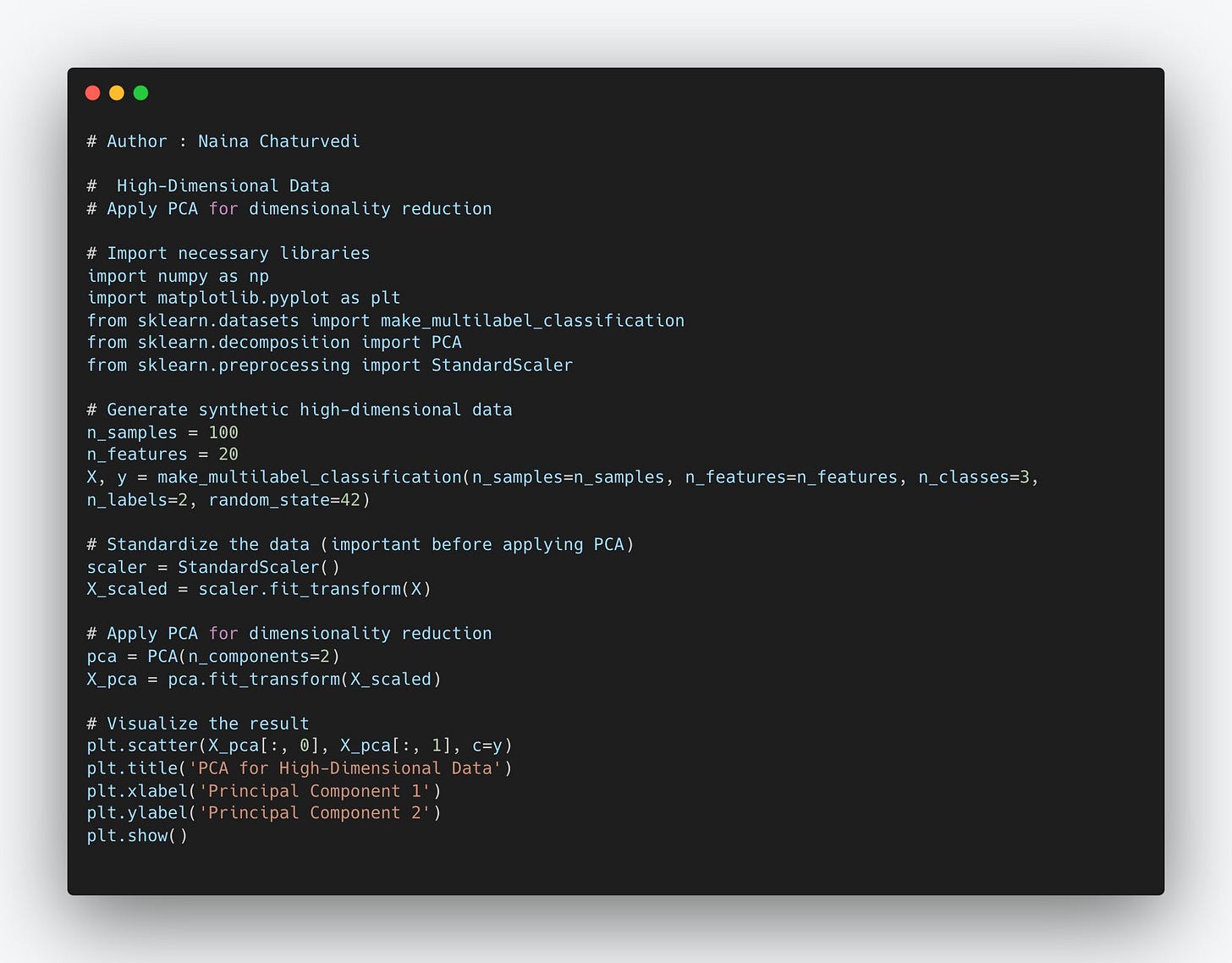

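2/ When to use PCA - High-Dimensional Data: PCA is especially useful for high-dimensional datasets, where the number of features (dimensions) is large relative to the number of data points. High dimensionality causes several problems, often grouped under the "curse of dimensionality": the data becomes sparse, distances between points become less informative, and models become more prone to overfitting.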

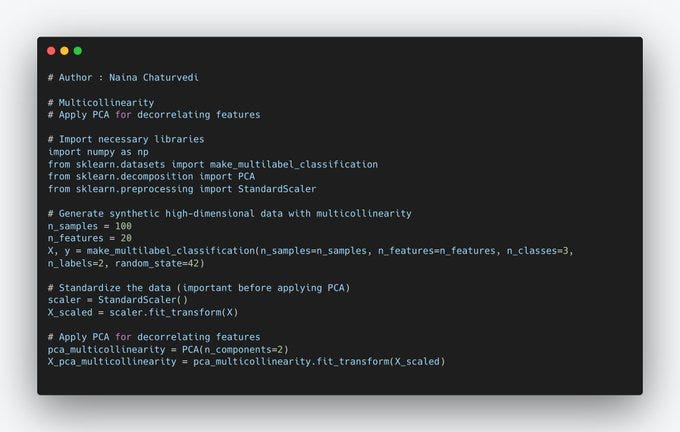

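3/ Multicollinearity: If your dataset contains highly correlated features (multicollinearity), PCA can transform them into a set of orthogonal (uncorrelated) principal components. This can improve the stability of models such as linear regression, whose coefficient estimates become unreliable under multicollinearity. The trade-off is that each component mixes several original features, so the components are usually harder to interpret than the features themselves.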
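A quick way to see this is to build two strongly correlated features and check the correlation of their principal component scores. The sketch below assumes NumPy and scikit-learn's `PCA`; the synthetic data is made up for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Two strongly correlated synthetic features: x2 is x1 plus a little noise
x1 = rng.normal(size=500)
x2 = x1 + 0.1 * rng.normal(size=500)
X = np.column_stack([x1, x2])

# Correlation between the original features (close to 1)
print("original correlation:", np.corrcoef(X, rowvar=False)[0, 1])

# Project onto both principal components
Z = PCA(n_components=2).fit_transform(X)

# Correlation between the two component scores (zero up to floating-point error)
print("component correlation:", np.corrcoef(Z, rowvar=False)[0, 1])
```

Because the component scores are uncorrelated by construction, a downstream model no longer sees a redundant pair of inputs.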

4/ Data Compression: PCA can be used for data compression, particularly when storage space or bandwidth is limited. By reducing the dimensionality, you can represent data more efficiently while retaining most of its essential characteristics.
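As a minimal sketch of PCA as lossy compression, the snippet below keeps 16 of the 64 pixel features of scikit-learn's bundled digits dataset and reconstructs the images with `inverse_transform`. The dataset and the value of `k` are illustrative assumptions, not part of the original text.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA

X = load_digits().data  # 1797 images, each 8x8 pixels flattened to 64 features

k = 16  # number of components to keep (illustrative choice)
pca = PCA(n_components=k).fit(X)

Z = pca.transform(X)              # compressed: k scores per image instead of 64 pixels
X_hat = pca.inverse_transform(Z)  # approximate reconstruction in the original 64-D space

# Decoding also needs the shared component matrix and the feature means
stored = Z.size + pca.components_.size + pca.mean_.size
print(f"values stored: {X.size} original vs {stored} compressed")
print(f"variance retained: {pca.explained_variance_ratio_.sum():.1%}")
print(f"mean squared reconstruction error: {np.mean((X - X_hat) ** 2):.3f}")
```

The discarded components cannot be recovered, so the reconstruction is an approximation whose quality depends on how much variance the kept components explain.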

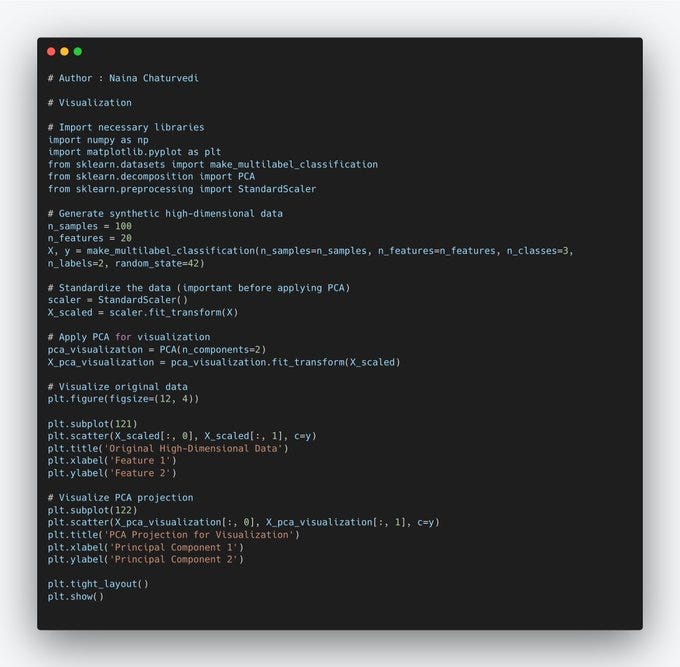

5/ Visualization: When you want to visualize high-dimensional data in two or three dimensions, PCA can be used to project the data onto a lower-dimensional space while preserving as much variance as possible. This makes it easier to explore and understand the data.
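A minimal sketch of a 2-D PCA projection, assuming scikit-learn and matplotlib are available and using the bundled Iris dataset (four features) as a stand-in for higher-dimensional data. The output file name `iris_pca.png` is arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = load_iris()

# Standardize the four measurements, then project onto the first two components
X_std = StandardScaler().fit_transform(iris.data)
pca = PCA(n_components=2)
Z = pca.fit_transform(X_std)

fig, ax = plt.subplots()
scatter = ax.scatter(Z[:, 0], Z[:, 1], c=iris.target, cmap="viridis", s=15)
# Label each axis with the share of variance its component explains
ax.set_xlabel(f"PC1 ({pca.explained_variance_ratio_[0]:.0%} of variance)")
ax.set_ylabel(f"PC2 ({pca.explained_variance_ratio_[1]:.0%} of variance)")
handles, _ = scatter.legend_elements()
ax.legend(handles, list(iris.target_names), title="species")
fig.savefig("iris_pca.png", dpi=120)
```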

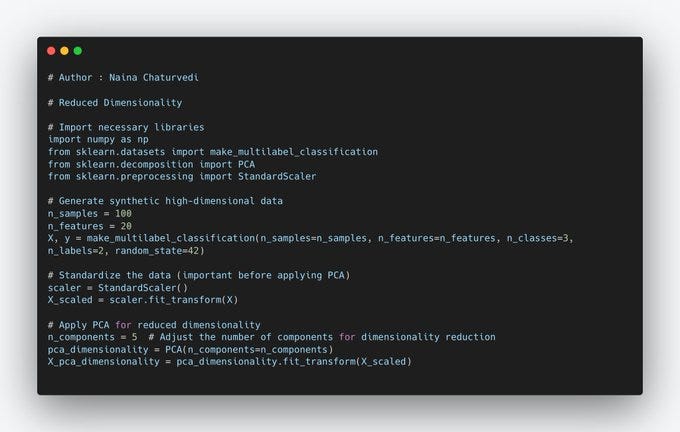

6/ Why use PCA - Reduced Dimensionality: PCA simplifies the dataset by reducing the number of dimensions. This can lead to faster training and prediction with machine learning models, especially when the original feature space is large.

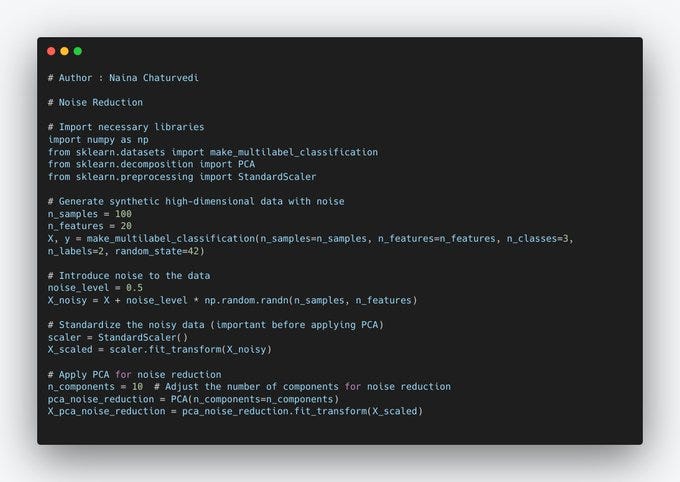

7/ Noise Reduction: High-dimensional data often contains noise or irrelevant information. PCA can help filter out this noise by keeping only the leading principal components, which capture the dominant patterns in the data, and discarding the low-variance components, which often consist largely of noise.
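To illustrate denoising, the sketch below generates synthetic data that truly lies in a 3-dimensional subspace of a 50-dimensional space, adds Gaussian noise, and reconstructs the data from only the top 3 components. The dimensions, the noise level, and the assumption that the true rank is known are all choices made for this example; with real data the number of signal components has to be estimated.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(42)

# Synthetic "clean" data that lives in a 3-dimensional subspace of 50 dimensions
n_samples, n_features, true_rank = 1000, 50, 3
latent = rng.normal(size=(n_samples, true_rank))
mixing = rng.normal(size=(true_rank, n_features))
X_clean = latent @ mixing

# Observed data = clean signal + isotropic Gaussian noise
X_noisy = X_clean + 0.5 * rng.normal(size=X_clean.shape)

# Keep only the leading components and map back to the original space
pca = PCA(n_components=true_rank).fit(X_noisy)
X_denoised = pca.inverse_transform(pca.transform(X_noisy))

mse_noisy = np.mean((X_noisy - X_clean) ** 2)
mse_denoised = np.mean((X_denoised - X_clean) ** 2)
print(f"MSE vs clean data before: {mse_noisy:.3f}, after: {mse_denoised:.3f}")
```

Only the part of the noise that happens to fall inside the kept 3-dimensional subspace survives, which is why the error drops sharply here.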

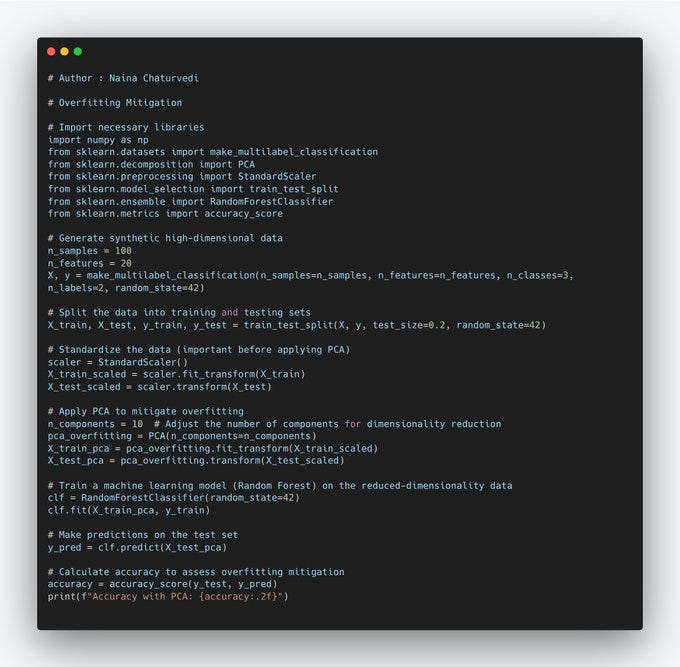

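8/ Overfitting Mitigation: PCA can reduce the risk of overfitting by decreasing the number of features a model has to work with. Overfitting occurs when a model learns the noise in the training data rather than the underlying pattern; with fewer input dimensions, a model typically has fewer parameters and less room to fit that noise. This is not guaranteed, though: PCA ignores the target variable, so a low-variance direction it discards may still be predictive.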

9/ PCA is a statistical method used for reducing the dimensionality of data while retaining its essential information. It achieves this by identifying the most significant patterns (principal components) in the data and representing it in a lower-dimensional space.

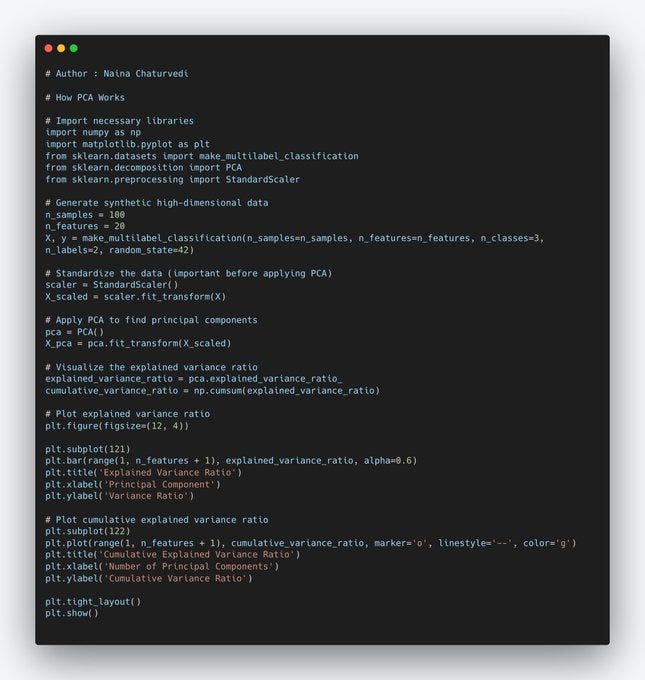

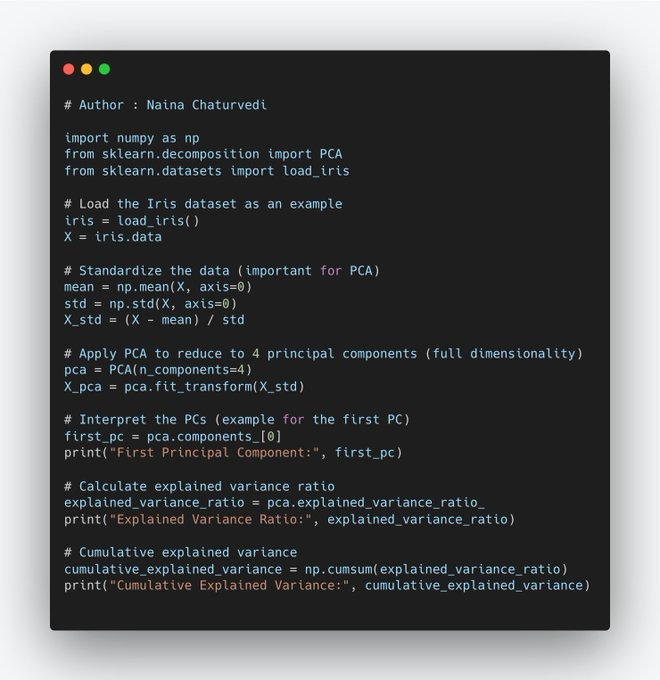

10/ How PCA Works: PCA finds linear combinations of the original features to create new variables (principal components) that maximize the variance in the data. The first principal component captures the most variance; each subsequent component captures the most remaining variance while being orthogonal to all previous components.

11/ By selecting a subset of these principal components, you can represent the data with reduced dimensions.
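The claim that the first component is the direction of maximum variance can be checked numerically. This sketch assumes NumPy and scikit-learn; the covariance matrix is an arbitrary example, and `projected_variance` is a small helper written for illustration. It compares the variance of the data projected onto PC1 with the variance along 1,000 random unit directions.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(1)

# Correlated 3-D Gaussian data (covariance chosen arbitrarily for illustration)
cov = np.array([[4.0, 2.0, 0.5],
                [2.0, 3.0, 0.3],
                [0.5, 0.3, 1.0]])
X = rng.multivariate_normal(mean=np.zeros(3), cov=cov, size=2000)

pca = PCA().fit(X)
pc1 = pca.components_[0]  # unit-length direction of maximal variance


def projected_variance(X, w):
    """Sample variance (ddof=1) of the data projected onto unit vector w."""
    return np.var(X @ w, ddof=1)


# Variance along PC1 versus many random unit directions
random_dirs = rng.normal(size=(1000, 3))
random_dirs /= np.linalg.norm(random_dirs, axis=1, keepdims=True)
best_random = max(projected_variance(X, w) for w in random_dirs)

print(f"variance along PC1:             {projected_variance(X, pc1):.3f}")
print(f"best of 1000 random directions: {best_random:.3f}")
print("variance explained by each PC:", np.round(pca.explained_variance_, 3))
```

No random direction beats PC1, and the per-component variances come out in descending order, which is what makes keeping "the first k components" a sensible way to select a subset.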

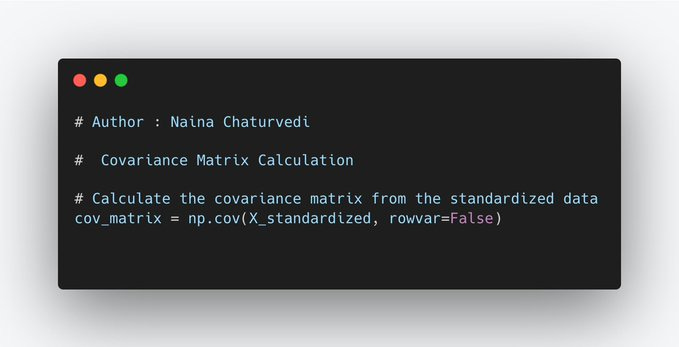

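12/ Covariance Matrix: After centering (and usually standardizing) the data, PCA computes its covariance matrix. The covariance between two features measures how they vary together: a positive covariance means that when one feature is above its mean, the other tends to be above its mean as well, while a negative covariance means that one tends to decrease as the other increases. The diagonal entries of the matrix are the variances of the individual features.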
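Computed by hand, the covariance matrix is the transposed centered data matrix times the centered data matrix, divided by n - 1 (`C = X_c.T @ X_c / (n - 1)`). The toy values below are made up: the first two features rise together (positive covariance), while the third tends to fall as the first rises (negative covariance). NumPy's `np.cov` is used only as a cross-check.

```python
import numpy as np

# Tiny toy dataset: 5 samples (rows) x 3 features (columns), values made up
X = np.array([[2.5, 2.4, 0.5],
              [0.5, 0.7, 1.9],
              [2.2, 2.9, 0.4],
              [1.9, 2.2, 0.8],
              [3.1, 3.0, 0.2]])

# Center each feature, then C = X_c^T X_c / (n - 1)
X_centered = X - X.mean(axis=0)
n = X.shape[0]
C = X_centered.T @ X_centered / (n - 1)

# Same result as NumPy's built-in (rowvar=False: columns are features)
assert np.allclose(C, np.cov(X, rowvar=False))
print(np.round(C, 3))
```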

13/ Eigenvalues and Eigenvectors: After obtaining the covariance matrix, PCA calculates its eigenvalues and the corresponding eigenvectors. Eigenvectors are directions in the original feature space, and eigenvalues represent the variance of the data along those directions.

14/ The eigenvector with the highest eigenvalue is the first principal component, the second highest eigenvalue corresponds to the second principal component, and so on. Eigenvalues indicate how much variance is explained by each principal component.
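The eigen-step can be sketched with NumPy's `np.linalg.eigh`, which is designed for symmetric matrices such as a covariance matrix and returns eigenvalues in ascending order, so they have to be re-sorted. Iris is an arbitrary example dataset, and the comparison with scikit-learn's `explained_variance_` is only a sanity check. The orthonormality assertion is what makes the principal components orthogonal.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data
X_centered = X - X.mean(axis=0)
C = np.cov(X_centered, rowvar=False)

# eigh is meant for symmetric matrices; it returns eigenvalues in ascending order
eigvals, eigvecs = np.linalg.eigh(C)

# Reorder so the largest eigenvalue (first principal component) comes first
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]  # columns are eigenvectors

# Eigenvectors of a symmetric matrix are orthonormal: V^T V = I
assert np.allclose(eigvecs.T @ eigvecs, np.eye(X.shape[1]))

# The eigenvalues match scikit-learn's explained_variance_
pca = PCA().fit(X)
assert np.allclose(eigvals, pca.explained_variance_)

print("eigenvalues:", np.round(eigvals, 4))
print("share of variance:", np.round(eigvals / eigvals.sum(), 4))
```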

15/ PCA transforms the original features into a new set of orthogonal components (principal components) that capture the maximum variance in the data.

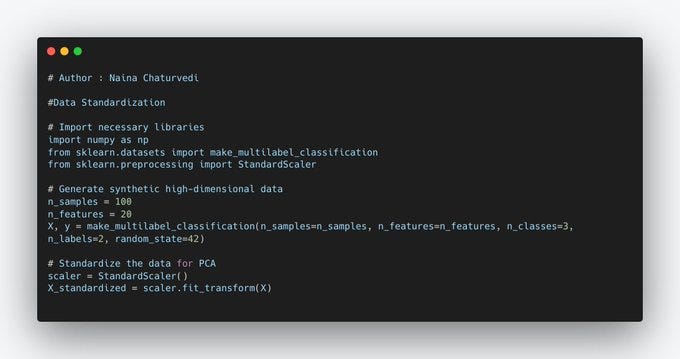

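16/ Data Standardization: In practice, PCA is usually preceded by standardization, so that each feature has a mean of 0 and a standard deviation of 1. The two parts play different roles. Centering (subtracting each feature's mean) is required, because PCA looks for directions of maximum variance around the mean. Scaling to unit standard deviation is recommended when features are measured in different units or on different scales; otherwise the features with the largest numeric variance dominate the first components.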
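The effect of scaling is easy to demonstrate with two independent synthetic features on very different scales (the numbers are hypothetical, loosely resembling a height in metres and an annual salary). scikit-learn's `PCA` centers the data internally but does not rescale it, so without a `StandardScaler` the large-scale feature takes over the first component.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 500

# Two independent features on very different scales (hypothetical values)
small = rng.normal(loc=1.7, scale=0.1, size=n)
large = rng.normal(loc=70000.0, scale=15000.0, size=n)
X = np.column_stack([small, large])

# Without scaling (PCA still centers), PC1 is essentially the large-scale axis
pc1_raw = PCA(n_components=1).fit(X).components_[0]

# After standardization, both features have unit variance and compete on equal terms
X_std = StandardScaler().fit_transform(X)
ratios_std = PCA().fit(X_std).explained_variance_ratio_

print("|PC1 loadings|, raw data:", np.round(np.abs(pc1_raw), 4))
print("explained variance ratios, standardized:", np.round(ratios_std, 3))
```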

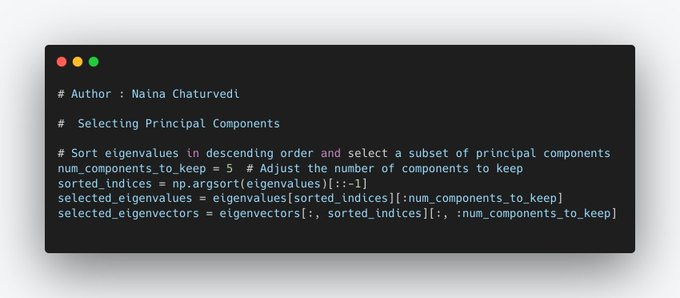

17/ Selecting Principal Components: PCA sorts the eigenvalues in descending order. The eigenvector corresponding to the largest eigenvalue becomes the first principal component, the second-largest eigenvalue corresponds to the second principal component, and so on.

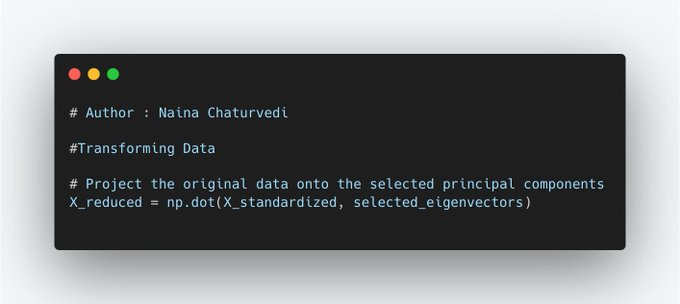

18/ Transforming Data: Finally, the centered (or standardized) data is projected onto the selected principal components to create a new dataset with reduced dimensions. This projection is a matrix multiplication: the n × p data matrix is multiplied by the p × k matrix whose columns are the k selected eigenvectors, giving an n × k matrix of component scores.
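Putting steps 12 to 18 together, a from-scratch sketch in NumPy might look like the function below. `pca_from_scratch` is a hypothetical helper written for this example, not a library function, and scikit-learn is used only to check the result. Because an eigenvector and its negation are equally valid, the two results can differ in the sign of each column, so the comparison uses absolute values.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler


def pca_from_scratch(X, k):
    """Project X onto its top-k principal components (a teaching sketch)."""
    # 1. Standardize: zero mean, unit standard deviation per feature
    X_std = (X - X.mean(axis=0)) / X.std(axis=0)
    # 2. Covariance matrix of the standardized features
    C = np.cov(X_std, rowvar=False)
    # 3. Eigendecomposition (eigh handles symmetric matrices; ascending order)
    eigvals, eigvecs = np.linalg.eigh(C)
    # 4. Sort eigenpairs by descending eigenvalue and keep the top k
    order = np.argsort(eigvals)[::-1][:k]
    W = eigvecs[:, order]  # shape (n_features, k)
    # 5. Project: (n_samples, n_features) @ (n_features, k) -> (n_samples, k)
    return X_std @ W


X = load_iris().data
Z_ours = pca_from_scratch(X, k=2)

# Reference result from scikit-learn on the same standardized data
Z_sklearn = PCA(n_components=2).fit_transform(StandardScaler().fit_transform(X))

# Each component is only defined up to sign, so compare absolute values
print(np.allclose(np.abs(Z_ours), np.abs(Z_sklearn)))
```

For real work, a library implementation such as scikit-learn's `PCA` is usually preferable; it also provides SVD-based solvers that avoid forming the covariance matrix explicitly.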

19/ PCA involves a trade-off between reducing dimensionality and retaining information. Retaining more PCs preserves more information but yields higher-dimensional data; retaining fewer PCs simplifies the data but loses more information.
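The trade-off can be made precise. For centered data, the squared reconstruction error from keeping k components, divided by n - 1, equals the sum of the discarded eigenvalues, so every component dropped costs exactly its own variance. The sketch below checks this identity with NumPy on synthetic random data (an assumption made for illustration).

```python
import numpy as np

rng = np.random.default_rng(7)

# Synthetic correlated data: 300 samples x 6 features
X = rng.normal(size=(300, 6)) @ rng.normal(size=(6, 6))
X_c = X - X.mean(axis=0)
n = X_c.shape[0]

# Eigenpairs of the covariance matrix, sorted by descending eigenvalue
eigvals, eigvecs = np.linalg.eigh(np.cov(X_c, rowvar=False))
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

for k in range(1, X.shape[1] + 1):
    W = eigvecs[:, :k]
    X_rec = X_c @ W @ W.T                        # project onto k PCs and map back
    err = np.sum((X_c - X_rec) ** 2) / (n - 1)   # squared error, scaled like the covariance
    retained = eigvals[:k].sum() / eigvals.sum()
    print(f"k={k}: retained variance {retained:.3f}, error {err:.4f}, "
          f"sum of discarded eigenvalues {eigvals[k:].sum():.4f}")
```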

20/ Choosing the Right Number of Principal Components: Choosing the optimal number of principal components (PCs) to retain in PCA is a crucial decision, as it balances dimensionality reduction with information retention.

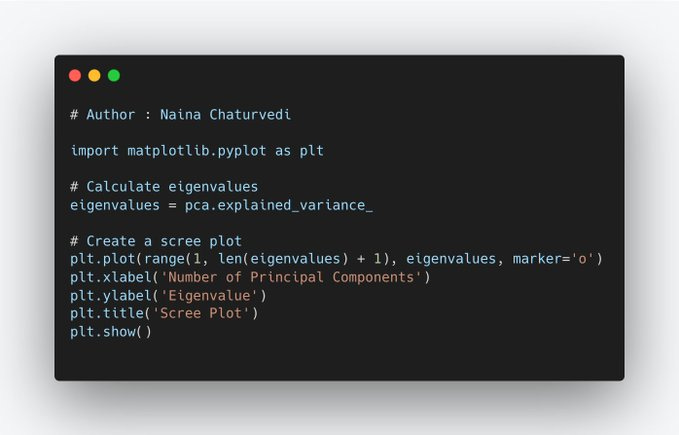

21/ A scree plot shows the eigenvalue (variance) of each principal component, sorted in descending order. Look for an "elbow" in the scree plot, where the eigenvalues start to level off. Keeping the PCs before this point is a reasonable choice, although in practice the elbow is not always clear-cut.
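A scree plot takes only a few lines with matplotlib. This sketch assumes scikit-learn and matplotlib are installed, uses the bundled wine dataset (13 standardized features) as an example, and writes the figure to `scree.png`, an arbitrary file name.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script runs without a display
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Fit PCA with all components on the standardized wine features
X_std = StandardScaler().fit_transform(load_wine().data)
pca = PCA().fit(X_std)

eigvals = pca.explained_variance_              # already sorted in descending order
components = np.arange(1, len(eigvals) + 1)

fig, ax = plt.subplots()
ax.plot(components, eigvals, marker="o")
ax.set_xticks(components)
ax.set_xlabel("principal component")
ax.set_ylabel("eigenvalue (explained variance)")
ax.set_title("Scree plot")
fig.savefig("scree.png", dpi=120)
```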

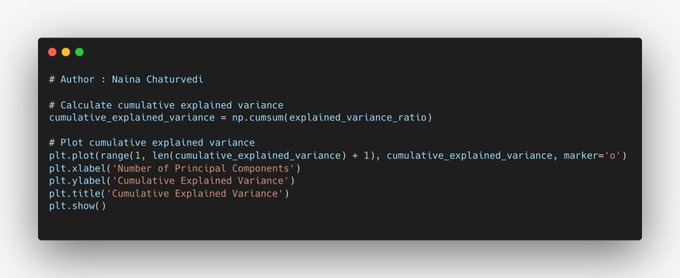

22/ The cumulative explained variance is the fraction of the total variance explained by the first k PCs. To use it, compute the cumulative explained variance for each k and choose the smallest number of PCs that captures a sufficiently high proportion of the total variance (e.g., 95%).
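With scikit-learn, the cumulative explained variance is `np.cumsum(pca.explained_variance_ratio_)`. The sketch below picks the smallest number of components whose cumulative share exceeds 95% and compares it with scikit-learn's built-in rule: passing a float between 0 and 1 as `n_components` (with `svd_solver="full"`) selects the number of components needed to exceed that fraction of the variance. The digits dataset and the 95% threshold are illustrative choices.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA

X = load_digits().data  # 1797 images x 64 pixel features

pca = PCA().fit(X)
cumulative = np.cumsum(pca.explained_variance_ratio_)

# Smallest k whose cumulative explained variance exceeds the threshold
threshold = 0.95
k = int(np.searchsorted(cumulative, threshold, side="right") + 1)
print(f"{k} components explain {cumulative[k - 1]:.1%} of the variance")

# scikit-learn applies the same rule when n_components is a float in (0, 1)
pca_95 = PCA(n_components=threshold, svd_solver="full").fit(X)
print(pca_95.n_components_)
```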

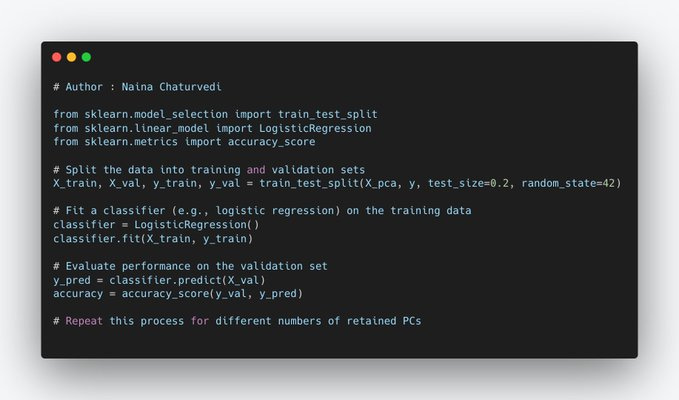

23/ Cross-validation can be used to compare downstream model performance for different numbers of retained PCs. Split the data into training and validation sets, fit PCA with a given number of PCs together with the model on the training set only, and evaluate the model on the validation set. Repeating this over several folds gives a more reliable estimate; choose the number of PCs that performs best.
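A minimal sketch of this procedure with scikit-learn, using 5-fold cross-validation rather than a single train/validation split. PCA is placed inside a `Pipeline` so it is refit on each training fold, and `GridSearchCV` compares several candidate numbers of components. The digits dataset, the logistic regression model, and the grid of values are assumptions made for the example.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)

# PCA sits inside the pipeline, so it is fit on each training fold only and
# the validation fold never influences the estimated components
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("pca", PCA()),
    ("clf", LogisticRegression(max_iter=2000)),
])

# Candidate numbers of retained components (an illustrative grid)
param_grid = {"pca__n_components": [5, 10, 20, 30, 40]}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)

for n, score in zip(param_grid["pca__n_components"],
                    search.cv_results_["mean_test_score"]):
    print(f"{n:>2} components: mean CV accuracy {score:.3f}")
print("best:", search.best_params_)
```

Fitting PCA on the full dataset before splitting would leak information from the validation folds into the components, which is why the pipeline wraps both steps.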
