Efficiently Fine Tuning ML Models - Explained in Simple terms with Implementation Details

With code, techniques and best tips...

Hi All,

Fine-tuning a machine learning model means taking a pre-trained model and training it further on a specific task or dataset to improve its performance on that task. In this post we will cover fine-tuning ML models in detail.

In this post we will cover —

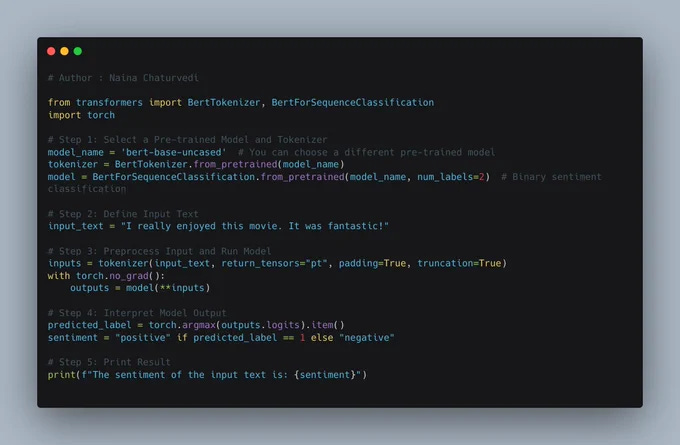

Select a Pre-trained Model:

Choose a pre-trained model relevant to your task and dataset.

Pre-trained models such as BERT, GPT, and ResNet offer useful learned features.

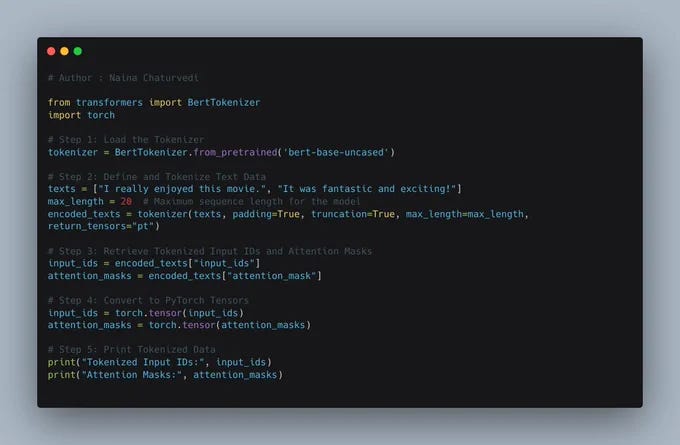

Prepare the Data:

Format and preprocess data for compatibility with the pre-trained model.

Tokenization, data augmentation, and other preprocessing steps may be needed.

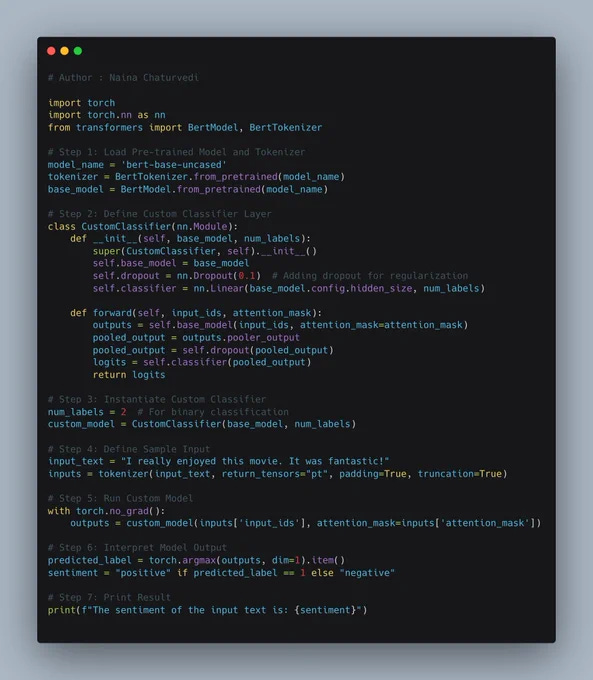

Modify the Model Architecture:

Adjust pre-trained model architecture to adapt it to your task.

Add task-specific layers on top of pre-trained layers.

Define Loss and Metrics:

Choose a suitable loss function and evaluation metrics.

These guide the optimization process during fine-tuning.

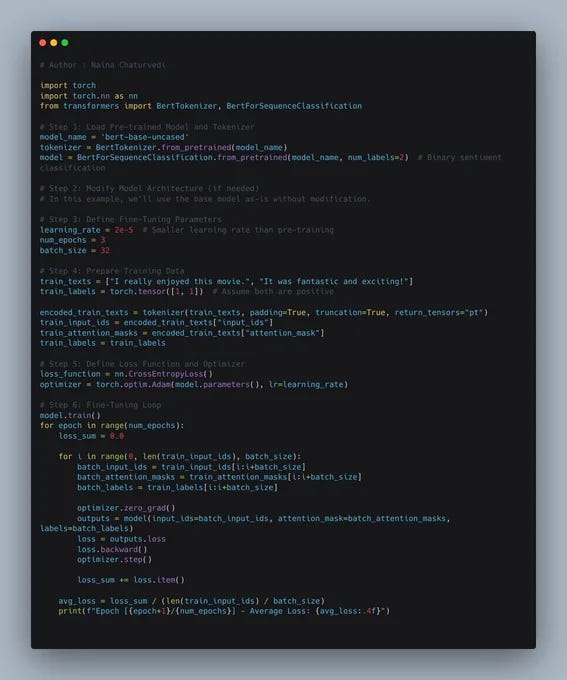

Fine-tune the Model:

Train the modified model on your specific dataset.

Use a smaller learning rate to avoid overwriting pre-trained knowledge.

Regularization and Hyperparameter Tuning:

Apply dropout, batch normalization, and L2 regularization to prevent overfitting.

Experiment with hyperparameters like learning rate, batch size, and optimizers.

Monitor and Validate:

Observe model's performance on validation dataset.

Use techniques like early stopping to prevent overfitting.

Evaluate and Iterate:

Assess model on a separate test dataset.

Iterate and adjust fine-tuning based on evaluation results.

Learning Rate Scheduling:

Adjust learning rate during training to aid convergence.

Schedules such as learning rate annealing or step decay can help, and adaptive optimizers such as Adam adjust per-parameter step sizes on their own.
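
To make the idea of a schedule concrete, here is a minimal pure-Python sketch of two common ones: step decay and cosine annealing. The function names, the halving factor, and the 10-epoch interval are assumptions chosen for illustration; they are not values recommended by this post or taken from any library.

```python
import math


def step_decay(base_lr: float, epoch: int, drop: float = 0.5, every: int = 10) -> float:
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return base_lr * (drop ** (epoch // every))


def cosine_annealing(base_lr: float, epoch: int, total_epochs: int, min_lr: float = 0.0) -> float:
    """Smoothly lower the rate from base_lr at epoch 0 to min_lr at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Print both schedules side by side for a few epochs.
for epoch in (0, 10, 20, 30):
    print(epoch, step_decay(1e-3, epoch), cosine_annealing(1e-3, epoch, total_epochs=30))
```

In practice you would usually use the scheduler that ships with your framework (PyTorch, for example, provides `torch.optim.lr_scheduler.StepLR`) rather than writing your own.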

Early Stopping:

Monitor model's performance on a validation set.

Stop training when validation performance stops improving, to avoid overfitting.
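
One common way to implement early stopping is a "patience" counter: stop once the validation loss has failed to improve for a set number of epochs in a row. The sketch below assumes that approach. The class name `EarlyStopping`, its parameters, and the list of validation losses are all hypothetical and made up for illustration.

```python
class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses, one per epoch (not real results).
val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.70]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best_loss)
```

In a real training loop you would typically also save the model weights whenever `best_loss` improves, so you can restore the best checkpoint after stopping.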

Regularization Techniques:

Apply dropout, L1 or L2 regularization, and batch normalization.

Prevent overfitting and enhance model's generalization.

Data Augmentation:

Generate variations of training data with transformations.

Enhance model's robustness and generalization.
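
As a rough illustration of "variations of training data", the sketch below applies a random crop and a random horizontal flip to a toy image stored as a list of rows. The helper names and the 4x4 image are assumptions for the example. Real pipelines normally use an image library's transforms instead of hand-written ones.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2D image (a list of rows)."""
    return [row[::-1] for row in image]


def random_crop(image, size, rng):
    """Cut a random size x size window out of a 2D image."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - size)
    left = rng.randint(0, width - size)
    return [row[left:left + size] for row in image[top:top + size]]


def augment(image, crop_size, rng, flip_prob=0.5):
    """Apply a random crop, then flip with probability `flip_prob`."""
    out = random_crop(image, crop_size, rng)
    if rng.random() < flip_prob:
        out = horizontal_flip(out)
    return out


rng = random.Random(0)  # seeded so runs are repeatable
image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 "image"
variants = [augment(image, crop_size=3, rng=rng) for _ in range(5)]
for variant in variants:
    print(variant)
```

Each call produces a new 3x3 view of the same picture, so the model sees slightly different inputs for the same label.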

Transfer Learning:

Fine-tune specific layers of pre-trained model.

Adapt model to your task without erasing pre-trained knowledge.

Gradient Clipping:

Limit gradient magnitudes, by value or by norm, to prevent unstable training.
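
Here is a minimal sketch of one clipping variant, clipping by global L2 norm: if the combined norm of all gradients exceeds a threshold, every gradient is scaled down by the same factor. The function name and the flat list of gradients are simplifying assumptions. PyTorch offers the same idea as `torch.nn.utils.clip_grad_norm_`.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most `max_norm`.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm


grads = [3.0, 4.0]  # combined L2 norm is 5.0
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before, clipped)
```

Because every gradient is scaled by the same factor, the update direction is preserved and only its size is limited.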

Hyperparameter Tuning:

Experiment with batch size, optimizer, regularization strength, network architecture.

Ensemble Methods:

Combine multiple models' predictions using bagging, boosting, or stacking.
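
The simplest way to combine predictions is to average the class probabilities from several models (often called soft voting), which is the aggregation step used in bagging-style ensembles. The sketch below shows only that averaging step, not boosting or stacking. The three sets of model outputs are hypothetical numbers made up for the example.

```python
def average_probabilities(per_model_probs):
    """Average class probabilities across models for each example (soft voting)."""
    n_models = len(per_model_probs)
    n_examples = len(per_model_probs[0])
    n_classes = len(per_model_probs[0][0])
    return [
        [sum(model[i][c] for model in per_model_probs) / n_models for c in range(n_classes)]
        for i in range(n_examples)
    ]


def predict(avg_probs):
    """Pick the class with the highest averaged probability."""
    return [max(range(len(p)), key=p.__getitem__) for p in avg_probs]


# Hypothetical outputs of three fine-tuned models on two examples, two classes.
model_a = [[0.9, 0.1], [0.4, 0.6]]
model_b = [[0.6, 0.4], [0.7, 0.3]]
model_c = [[0.8, 0.2], [0.3, 0.7]]
avg = average_probabilities([model_a, model_b, model_c])
labels = predict(avg)
print(avg, labels)
```

Note that `model_b` on its own would pick class 0 for the second example, but the averaged vote picks class 1.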

Let’s get started —

What is fine-tuning in ML models, and why use it?

Imagine you have a super smart robot friend. This robot friend can look at things and tell you what they are. But, it's not perfect at first and might make a few mistakes. So, you decide to teach it better.

You show your robot friend lots of pictures with labels. For example, you show it pictures of dogs and tell it, "This is a dog." You also show it pictures of cats and cars, and you tell it what those are too.

Now, your robot friend has learned a little bit about these things, but it's still not perfect. Sometimes it might still mix up a cat and a dog.

Here comes the tricky part. You want your robot friend to get even better and stop mixing up things. So, you give it more pictures and labels, and this time, you tell it when it's right or wrong. When it makes a mistake like calling a cat a dog, you say, "Oops, that's not a dog, that's a cat."

The robot friend listens and starts fixing its mistakes. It adjusts itself to become better at knowing what's a dog, what's a cat, and what's a car. This process of making your robot friend better by pointing out its mistakes and showing it more examples is a bit like fine-tuning.

After doing this many times, your robot friend becomes really good at telling things apart. It now knows cats from dogs and cars from trucks much better than before. And that's how we fine-tune a machine learning model to make it smarter!

Fine-tuning in machine learning refers to the process of taking a pre-trained model, which has been trained on a large dataset, and further training it on a smaller, task-specific dataset. This process allows the model to adapt its learned features to a specific problem or domain.

The primary reasons to use fine-tuning are:

Transfer of Pre-trained Knowledge: Pre-trained models have learned useful features from extensive datasets, enabling them to capture high-level patterns. Fine-tuning leverages this knowledge for your specific task, potentially leading to faster convergence and better performance.

Limited Data Availability: In many real-world scenarios, obtaining a large dataset for training from scratch might be impractical. Fine-tuning enables you to achieve good results even with limited labeled data.

Time and Resource Efficiency: Training models from scratch can be time-consuming and resource-intensive. Fine-tuning helps you capitalize on existing model architectures and weights, reducing training time and computational resources.

Domain Adaptation: Fine-tuning allows you to adapt a model trained on one domain to work effectively in a related but different domain. This is particularly useful when transferring knowledge across similar tasks.

Task-specific Adaptation: You can adjust the pre-trained model's architecture to suit your specific task. By adding or modifying layers, you customize the model's behavior to match the requirements of your problem.

Regularization and Generalization: Fine-tuning with regularization techniques, like dropout or L2 regularization, can enhance the model's ability to generalize to new, unseen data and prevent overfitting.

In summary, fine-tuning provides an efficient way to tailor pre-trained models to your specific tasks, leveraging their learned features and architecture to achieve better results with limited resources and data.

How to do fine-tuning:

Select a Pre-trained Model: Choose a pre-trained model that is relevant to your task and dataset. Pre-trained models, such as those based on BERT, GPT, or ResNet, are trained on large datasets and have learned useful features that you can reuse for your task.

Prepare the Data: Format and preprocess your data in a way that is compatible with the pre-trained model. This might involve tokenization, data augmentation, or other preprocessing steps.

Modify the Model Architecture: Depending on your task, you may need to modify the architecture of the pre-trained model. For example, you might add task-specific layers on top of the pre-trained layers to adapt it to your task.

Define Loss and Metrics: Choose an appropriate loss function and evaluation metrics for your task. These will guide the optimization process during fine-tuning.
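
For a classification task, a typical pairing is cross-entropy as the loss and accuracy as the metric. The sketch below assumes that pairing and writes both out in plain Python so you can see what they compute. The logits and labels are made-up values. Other tasks need other choices, for example mean squared error for regression.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(batch_logits, labels):
    """Mean negative log-probability assigned to the correct class."""
    losses = [-math.log(softmax(row)[y]) for row, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


def accuracy(batch_logits, labels):
    """Fraction of examples whose highest-scoring class matches the label."""
    preds = [max(range(len(row)), key=row.__getitem__) for row in batch_logits]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Hypothetical model outputs for three examples and three classes.
logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0], [1.0, 1.5, 0.0]]
labels = [0, 2, 0]
print(cross_entropy(logits, labels), accuracy(logits, labels))
```

The loss is what the optimizer minimizes during fine-tuning, while the metric is what you read to judge whether the model is actually getting better at the task.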

Fine-tune the Model: Train the modified model on your task-specific dataset. You'll typically use a smaller learning rate than during pre-training to avoid overwriting the pre-trained knowledge too quickly. You might also freeze some layers of the pre-trained model.
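
The last few steps can be sketched end to end with a toy model. The example below assumes a tiny "pre-trained" backbone whose weights stay frozen, a new logistic-regression head added on top, and plain SGD with a small learning rate that updates the head only. Every number here (the backbone weights, the dataset, the learning rate of 0.05) is invented for illustration. In PyTorch, freezing is typically done by setting `requires_grad = False` on the backbone's parameters.

```python
import math

# Hypothetical "pre-trained" backbone weights: 2 inputs -> 2 features (frozen).
BACKBONE_W = [[1.0, -1.0], [0.5, 0.5]]


def backbone(x):
    """Frozen feature extractor: linear map followed by ReLU. Never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def head(features, w, b):
    """New task-specific layer: logistic regression on the backbone features."""
    return sigmoid(sum(wi * f for wi, f in zip(w, features)) + b)


def mean_loss(data, w, b):
    """Binary cross-entropy averaged over the dataset."""
    total = 0.0
    for x, y in data:
        p = head(backbone(x), w, b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune(data, w, b, lr=0.05, epochs=200):
    """SGD on the head only; the backbone weights receive no updates."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            feats = backbone(x)
            err = head(feats, w, b) - y  # gradient of the loss w.r.t. the logit
            w = [wi - lr * err * f for wi, f in zip(w, feats)]
            b -= lr * err
    return w, b


# Toy task-specific dataset (made up): label is 1 when x[0] > x[1].
data = [([2.0, 1.0], 1), ([1.0, 3.0], 0), ([3.0, 0.5], 1), ([0.5, 2.0], 0)]
w0, b0 = [0.0, 0.0], 0.0
loss_before = mean_loss(data, w0, b0)
w1, b1 = fine_tune(data, w0, b0)
loss_after = mean_loss(data, w1, b1)
print(loss_before, loss_after)
```

Only the head's weights change during training, so whatever the backbone learned earlier is kept intact while the new layer adapts to the task.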

Read complete post -

Complete post on Fine tuning ML models
