Activation functions play a crucial role in neural networks, which are the building blocks of deep learning models.

1/ Imagine a magical machine that learns to recognize animals in pictures. Like a puzzle, it needs special buttons to work well. Activation functions are these buttons: they help the machine look at pictures in different ways, such as noticing size, brightness, and what matters most.

2/ Pictures can be tricky, so these buttons act like special glasses that help the machine see clearly. Just as you might use different tools for different parts of a puzzle, activation functions let the machine look at the same picture in several ways.

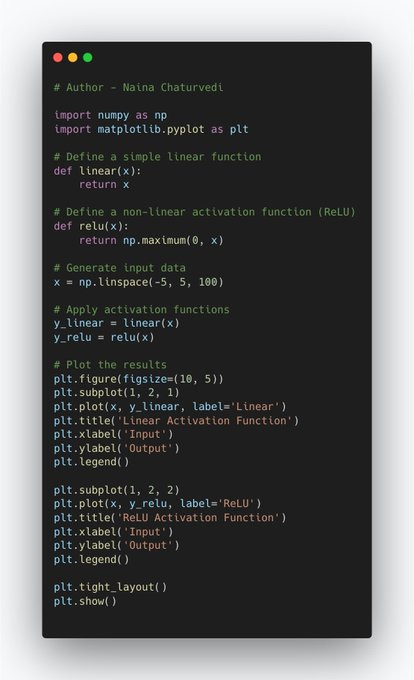

3/ An activation function is a mathematical function applied to the output of a neuron in an artificial neural network. Neurons take in inputs, multiply them by weights, sum them up, and then pass the result through an activation function.
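
A minimal sketch of that single-neuron computation in plain Python. The weights, the bias term, and the choice of sigmoid as the activation are illustrative assumptions:

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid, used here as the example activation."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Multiply inputs by weights, sum them, add a bias, then apply the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Illustrative values: z = 1*0.5 + 2*(-0.25) + 3*0.1 + 0.2 = 0.5
out = neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.1], bias=0.2, activation=sigmoid)
print(round(out, 4))  # 0.6225
```

Swapping `activation` for a different function changes only the last step. The weighted sum stays the same.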

4/ Activation functions are what let neural networks learn useful things from data. Their key job is to introduce non-linearity, which the network needs in order to learn complex relationships and patterns.

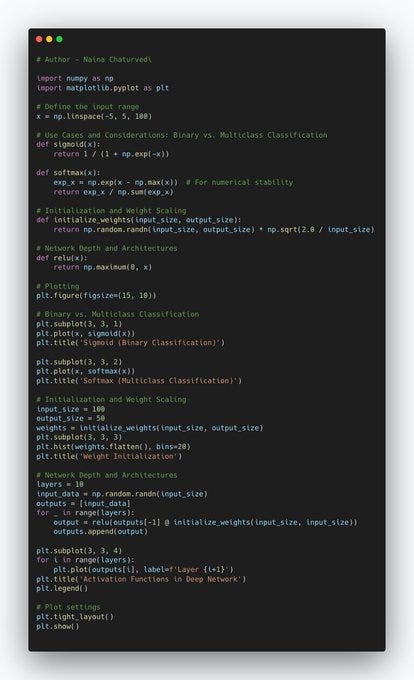

5/ Hidden Layers: In the hidden layers of a neural network, activation functions introduce non-linearity. Without them, any stack of layers would collapse into a single linear transformation of the input, no matter how deep the network is. Non-linearity is what allows the network to capture intricate patterns.
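
To see why, here is a small sketch with two hypothetical 2×2 weight matrices. Biases are omitted for brevity, and adding them would still yield a single affine map. Stacking two linear layers gives exactly the same result as one layer whose weights are the product W2·W1. Inserting a ReLU between the layers breaks that equivalence:

```python
def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def matvec(A, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, vi) for vi in v]

W1 = [[1.0, -2.0], [0.5, 3.0]]   # hypothetical first-layer weights
W2 = [[2.0, 1.0], [-1.0, 4.0]]   # hypothetical second-layer weights
x = [1.0, -1.0]

two_linear_layers = matvec(W2, matvec(W1, x))   # [3.5, -13.0]
one_merged_layer = matvec(matmul(W2, W1), x)    # [3.5, -13.0], identical
with_relu = matvec(W2, relu(matvec(W1, x)))     # [6.0, -3.0], differs once ReLU is inserted
```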

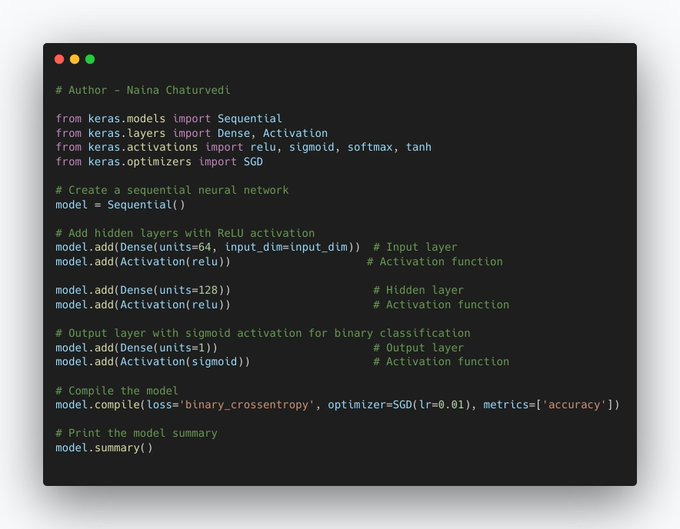

6/ Output Layer: The choice of activation function in the output layer depends on the nature of the task. For example, in binary classification tasks, the sigmoid activation function is often used to squash the output into a range between 0 and 1, representing a probability.

7/ Vanishing Gradient Problem: The choice of activation function also affects the vanishing gradient problem, which can slow down training. ReLU and its variants have a derivative of 1 for positive inputs. Gradients passing through active units are therefore not shrunk, unlike with sigmoid, whose derivative is at most 0.25.
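
A rough numeric sketch of that effect. It keeps only the activation-derivative factors of the chain rule and ignores the weights. As a simplification, it also assumes that every one of 10 layers sees the same positive pre-activation of 0.5. `math.prod` needs Python 3.8 or later.

```python
import math

def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), never larger than 0.25."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def relu_grad(z: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

depth, z = 10, 0.5   # simplifying assumption: same pre-activation at every layer
sigmoid_factor = math.prod(sigmoid_grad(z) for _ in range(depth))
relu_factor = math.prod(relu_grad(z) for _ in range(depth))
print(f"sigmoid: {sigmoid_factor:.2e}, relu: {relu_factor}")
```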

8/ Feature Extraction: Activation functions shape which features a network extracts from the input data, because different functions emphasize different aspects of the data. For instance, ReLU passes positive values through and sets negative values to zero. As a result, a unit responds only when the pattern it detects is present.

9/ Normalization and Transformation: Activation functions such as tanh also bound and re-center their outputs. Tanh maps any input into (-1, 1), centered at zero, which can suit certain tasks and can improve convergence during training.

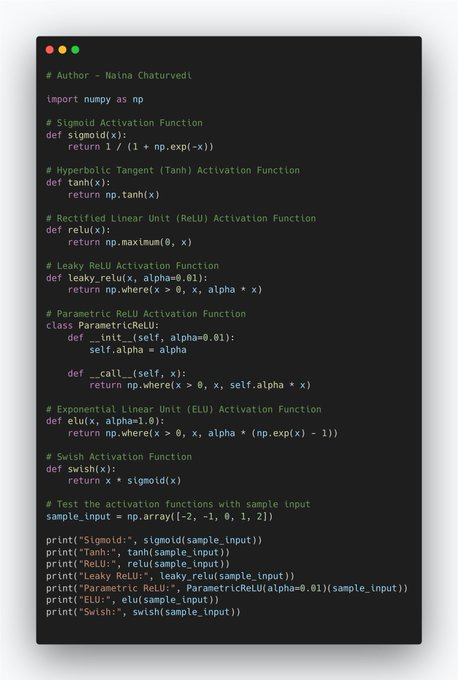

10/ Sigmoid: Range and Historical Significance: The sigmoid activation function squashes input values into the range between 0 and 1. Historically it was one of the most popular activation functions in neural networks. It owed that popularity to properties such as smoothness and outputs that can be interpreted as probabilities.

11/ Vanishing Gradient Problem: Sigmoid saturates, which causes the vanishing gradient problem. As inputs move toward the extremes (very large positive or negative values), the output flattens out near 0 or 1 and the gradient becomes very small. This slows learning and can cause the network to get stuck during training.
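
A small sketch of the sigmoid and its derivative, σ'(z) = σ(z)(1 - σ(z)). The derivative peaks at 0.25 at z = 0 and collapses toward zero as |z| grows, which is the saturation described above:

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real input into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid: sigmoid(z) * (1 - sigmoid(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

for z in [-10.0, -2.0, 0.0, 2.0, 10.0]:
    print(f"z={z:6.1f}  sigmoid={sigmoid(z):.5f}  grad={sigmoid_grad(z):.6f}")
```

This naive formula raises `OverflowError` inside `math.exp` for very negative inputs (below roughly -709). Library implementations guard against that case.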

12/ Hyperbolic Tangent (Tanh): Range and Similarities with Sigmoid: The tanh activation function also squashes input values, but into the range between -1 and 1. Tanh has the same S-shape as sigmoid but is zero-centered. It is symmetric around the origin (tanh(-x) = -tanh(x)), so its outputs tend to average closer to zero.

13/ Mitigating Vanishing Gradient: Tanh eases the vanishing gradient problem compared to sigmoid. Its derivative peaks at 1 at zero (versus 0.25 for sigmoid), and its zero-centered outputs tend to help optimization. It still saturates, though, so gradients vanish when inputs are far from zero.
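
A quick comparison using tanh'(z) = 1 - tanh²(z) and σ'(z) = σ(z)(1 - σ(z)). At zero, tanh's slope is four times sigmoid's, yet by z = 5 tanh has already saturated:

```python
import math

def tanh_grad(z: float) -> float:
    """Derivative of tanh: 1 - tanh(z)**2, which peaks at 1 when z = 0."""
    return 1.0 - math.tanh(z) ** 2

def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid, which peaks at 0.25 when z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

peak_ratio = tanh_grad(0.0) / sigmoid_grad(0.0)   # 1.0 / 0.25 = 4.0
saturated = tanh_grad(5.0)                        # about 1.8e-4: tanh still saturates
zero_centered = math.tanh(-2.0) + math.tanh(2.0)  # tanh is odd, so this is 0 (up to rounding)
```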

14/ Rectified Linear Unit (ReLU): Benefits: ReLU is a very simple activation function that outputs the input if it is positive and zero otherwise, i.e. max(0, x). It needs only a comparison and no exponentials, so it is cheap to compute, which speeds up training.
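
In code, ReLU and its derivative are just comparisons. The sketch below treats the derivative at exactly zero as 0, which is a common convention and an assumption here:

```python
def relu(z: float) -> float:
    """Return z for positive inputs, 0 otherwise."""
    return z if z > 0 else 0.0

def relu_grad(z: float) -> float:
    """1 for positive inputs, 0 otherwise (0 at z == 0 by convention)."""
    return 1.0 if z > 0 else 0.0

values = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(v) for v in values]
grads = [relu_grad(v) for v in values]
print(outputs, grads)
```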

15/ Dying ReLU and Solutions: The dying ReLU problem occurs when a neuron's input stays negative for every example. Its output and its gradient are then always zero, and its weights stop updating. Leaky ReLU and Parametric ReLU address this by letting a small gradient flow even when the unit is not active.
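
A toy illustration of a "dead" unit. The single weight, the large negative bias, the input range, and the upstream gradient of 1.0 are all hypothetical values chosen to make the effect visible. Every pre-activation is negative, so every output and every weight gradient is zero:

```python
def relu(z: float) -> float:
    return z if z > 0 else 0.0

def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0

w, b = 0.5, -10.0                          # hypothetical weight and a bias pushed far negative
inputs = [-3.0, -1.0, 0.0, 1.0, 3.0, 5.0]  # hypothetical inputs seen during training
upstream = 1.0                             # assumed gradient of the loss w.r.t. the unit's output

pre_activations = [w * x + b for x in inputs]   # all negative (the largest is -7.5)
outputs = [relu(z) for z in pre_activations]    # all 0.0
# Chain rule: dL/dw = dL/dy * relu'(z) * x, which is 0 whenever relu'(z) == 0
weight_grads = [upstream * relu_grad(z) * x for z, x in zip(pre_activations, inputs)]
```

Because every weight gradient is zero, gradient descent never moves `w` or `b`, and the unit stays inactive.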

16/ Leaky ReLU and Parametric ReLU: Allowing a Small Gradient: Leaky ReLU and Parametric ReLU are variants of ReLU that handle negative inputs differently. Instead of zero, they output a small fraction of the input (for example 0.01x). A small gradient therefore still passes through when the unit is not active.

17/ Adaptability of Parametric ReLU: Parametric ReLU takes this a step further by learning the slope for negative inputs during training instead of fixing it in advance. This adaptability can help the network learn better representations.
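
A sketch of both variants. The 0.01 slope for Leaky ReLU is a commonly used default. The starting α, learning rate, upstream gradient, and input in the PReLU update are hypothetical. The key difference is that PReLU's output depends on α, so α receives a gradient (equal to z for negative inputs) and can be trained like a weight:

```python
def leaky_relu(z: float, alpha: float = 0.01) -> float:
    """Identity for positive inputs, a small fixed slope alpha otherwise."""
    return z if z > 0 else alpha * z

def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    """Gradient is alpha (not 0) for non-positive inputs, so the unit keeps learning."""
    return 1.0 if z > 0 else alpha

def prelu(z: float, alpha: float) -> float:
    """Same shape as Leaky ReLU, but alpha is a learned parameter."""
    return z if z > 0 else alpha * z

def prelu_grad_alpha(z: float) -> float:
    """d prelu / d alpha: equals z for non-positive inputs, 0 otherwise."""
    return z if z <= 0 else 0.0

# One illustrative gradient-descent step on alpha (all values hypothetical)
alpha, learning_rate, upstream, z = 0.25, 0.1, 1.0, -2.0
alpha -= learning_rate * upstream * prelu_grad_alpha(z)   # alpha moves from 0.25 to 0.45
```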

18/ Exponential Linear Unit (ELU): Ability to Handle Negative Inputs: ELU is another solution to the dying ReLU problem. For negative inputs it curves smoothly toward a negative constant instead of cutting off at zero, which helps avoid dead neurons. Its gradient is non-zero for all inputs, which can make learning more stable.
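
A sketch of ELU with the commonly used α = 1, which is an assumption here. For z ≤ 0 it returns α(exp(z) - 1), which approaches -α smoothly instead of cutting off at zero. Its derivative there, α·exp(z), is small but positive:

```python
import math

def elu(z: float, alpha: float = 1.0) -> float:
    """Identity for positive inputs; alpha * (exp(z) - 1) otherwise, saturating at -alpha."""
    return z if z > 0 else alpha * (math.exp(z) - 1.0)

def elu_grad(z: float, alpha: float = 1.0) -> float:
    """1 for positive inputs; alpha * exp(z) otherwise (positive, though tiny for very negative z)."""
    return 1.0 if z > 0 else alpha * math.exp(z)

for z in [-5.0, -1.0, 0.0, 1.0]:
    print(f"z={z:5.1f}  elu={elu(z):8.4f}  grad={elu_grad(z):.4f}")
```

With α = 1 both pieces have slope 1 at zero, which is why the transition is smooth.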

19/ Swish: Self-Gated Activation: Swish is a relatively new activation function that uses a self-gating mechanism. It multiplies the input by a sigmoid of the input, swish(x) = x · sigmoid(βx). It behaves like ReLU for large positive and negative inputs but is smooth around zero.
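
A sketch of Swish as x · σ(βx). Here β = 1 is an assumption, and with β = 1 the function is also known as SiLU. β can also be treated as a trainable parameter. A large β pushes the curve toward ReLU, and small negative inputs produce small negative outputs rather than a hard zero:

```python
import math

def stable_sigmoid(x: float) -> float:
    """Sigmoid written to avoid overflow in math.exp for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def swish(z: float, beta: float = 1.0) -> float:
    """Self-gated activation: the input scaled by a sigmoid of itself."""
    return z * stable_sigmoid(beta * z)

for z in [-4.0, -1.0, 0.0, 1.0, 4.0]:
    print(f"z={z:5.1f}  swish={swish(z):.4f}  relu={max(0.0, z):.4f}")
```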

20/ Performance: Swish has been reported to outperform ReLU in some settings, although it is not always the best choice. It combines some benefits of ReLU and of sigmoid-like functions.

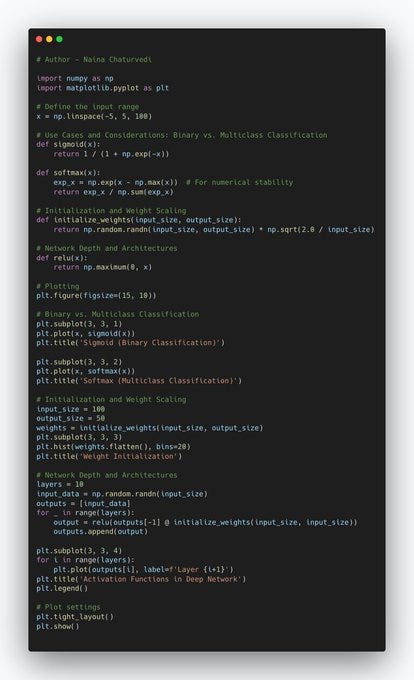

21/ Binary Classification: In binary classification, the task is to categorize inputs into two classes. Activation functions like the sigmoid function are commonly used in the output layer. Sigmoid squashes the output between 0 and 1.

22/ Multiclass Classification: For tasks with multiple classes, activation functions like the softmax function are more suitable in the output layer. Softmax normalizes the outputs into a probability distribution over all classes.
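
A sketch of both output activations. The logits and the 0.5 decision threshold are illustrative assumptions. The softmax subtracts the largest logit before exponentiating, a standard trick that avoids overflow without changing the result. With two classes and logits [z, 0], softmax reduces to sigmoid(z):

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Binary: one logit -> probability of the positive class
p_positive = sigmoid(1.2)
predicted_label = int(p_positive >= 0.5)

# Multiclass: one logit per class -> probability distribution over the classes
probs = softmax([2.0, 1.0, 0.1])
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(round(p_positive, 3), [round(p, 3) for p in probs], predicted_class)
```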

23/ Initialization and Weight Scaling: Importance of Weight Initialization: Weight initialization is crucial because it sets the starting point for the learning process. Poor initialization can lead to issues like vanishing or exploding gradients.
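
A rough experiment to make this concrete. The hypothetical `forward_std` helper pushes one random input through a stack of fully connected ReLU layers without biases. It compares a tiny fixed weight scale of 0.01 with He initialization, a scaling rule not mentioned above that draws weights with variance 2 / fan_in for ReLU networks. With the tiny scale the signal all but disappears after 10 layers, while He scaling keeps it at a usable magnitude:

```python
import math
import random

def forward_std(depth: int, width: int, weight_std: float, seed: int = 0) -> float:
    """Push one random input through `depth` ReLU layers; return the std of the final activations."""
    rng = random.Random(seed)
    h = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        # Fresh Gaussian weight matrix for each layer (width x width, no bias)
        W = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        h = [max(0.0, sum(w * x for w, x in zip(row, h))) for row in W]
    mean = sum(h) / width
    return math.sqrt(sum((x - mean) ** 2 for x in h) / width)

width, depth = 64, 10
tiny = forward_std(depth, width, weight_std=0.01)
he = forward_std(depth, width, weight_std=math.sqrt(2.0 / width))  # He scaling for ReLU
print(f"tiny init: {tiny:.3e}   He init: {he:.3f}")
```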

24/ Gradient Vanishing/Exploding: During backpropagation, gradients can become extremely small (vanishing) or extremely large (exploding), which affects how the network updates its weights.
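
A compact way to see both failure modes is the chain rule for a simplified chain of scalar layers. Assume each layer computes $z_i = w_i h_{i-1}$ and $h_i = f(z_i)$ with activation $f$, and drop the biases:

$$
\frac{\partial h_L}{\partial h_0} \;=\; \prod_{i=1}^{L} \frac{\partial h_i}{\partial h_{i-1}} \;=\; \prod_{i=1}^{L} f'(z_i)\, w_i
$$

If every factor satisfies $|f'(z_i)\, w_i| < 1$, the product shrinks geometrically with depth $L$ (vanishing). If every factor exceeds 1 in magnitude, it grows geometrically (exploding). For the sigmoid, $f'(z) \le 1/4$, so with $|w_i| \le 1$ the gradient is bounded by $(1/4)^L$.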

25/ Importance of Trying Multiple Activation Functions: Performance Variation: Different activation functions can perform differently on various tasks and datasets. What works well for one problem might not work as effectively for another.

26/ Avoiding Local Minima: Neural networks can sometimes get stuck in local minima during training. Trying various activation functions and architectures increases the chances of finding a configuration that helps the network escape such minima and achieve better results.

27/ Exploring Network Complexity: Different architectures involve varying levels of complexity and computational cost. By trying different architectures, you can strike a balance between model complexity and performance.

28/ Which Activation Function to Choose and When - Classification vs. Regression: The nature of the problem plays a role. For classification, ReLU (or a variant) is the usual choice in hidden layers, with sigmoid or softmax in the output layer. For regression, the output layer typically uses a linear (identity) activation, or tanh when the targets are scaled to (-1, 1).
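
One way to encode these rules of thumb is a small lookup table. The task names and the `pick_activations` helper are hypothetical. The defaults simply restate the guidance in this thread: ReLU in hidden layers, sigmoid or softmax for classification outputs, a linear output for regression, and tanh only for targets scaled to (-1, 1).

```python
from typing import Tuple

# Hypothetical rule-of-thumb table: output-layer activation by task type
OUTPUT_ACTIVATION = {
    "binary_classification": "sigmoid",
    "multiclass_classification": "softmax",
    "regression": "linear",            # identity output, unbounded targets
    "bounded_regression": "tanh",      # targets assumed scaled to (-1, 1)
}

def pick_activations(task: str, hidden: str = "relu") -> Tuple[str, str]:
    """Return (hidden-layer activation, output-layer activation) for a task type."""
    if task not in OUTPUT_ACTIVATION:
        raise ValueError(f"unknown task type: {task!r}")
    return hidden, OUTPUT_ACTIVATION[task]

print(pick_activations("multiclass_classification"))  # ('relu', 'softmax')
```

These are starting points only. As noted above, trying several activation functions on your own data remains worthwhile.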

29/ Data Characteristics: Data characteristics, such as scale and distribution, matter. ReLU and its variants are often used with sparse and large-scale data, while tanh and sigmoid may work better when inputs are normalized to a small range.

30/ Non-Linearity Requirement: If your problem involves complex relationships, non-linear activation functions like ReLU, tanh, or sigmoid are essential. If the problem is relatively linear, linear activation might suffice.

31/ Vanishing Gradient Concerns: If you're working with deep networks, using activation functions that mitigate vanishing gradients, like ReLU and its variants, can be beneficial.


Projects

Implemented Data Science and ML projects : https://bit.ly/3H0ufl7

Implemented Data Analytics projects : https://bit.ly/3QSy2p2

Complete ML Research Papers Summarized : https://bit.ly/3QVb5kY

Implemented Deep Learning Projects : https://bit.ly/3GQ56Ju

Implemented Machine Learning Ops Projects : https://bit.ly/3HkVYy1

Implemented Time Series Analysis and Forecasting Projects : https://bit.ly/3HhJ82D

Implemented Applied Machine Learning Projects : https://bit.ly/3GX1SEm

Implemented TensorFlow and Keras Projects : https://bit.ly/3JmGw6f

Implemented PyTorch Projects : https://bit.ly/3WuwbYu

Implemented Scikit Learn Projects : https://bit.ly/3Wn9KV7

Implemented Big Data Projects : https://bit.ly/3kwF7zv

Implemented Cloud Machine Learning Projects : https://bit.ly/3ktKaka

Implemented Neural Networks Projects : https://bit.ly/3J4Js71

Implemented OpenCV Projects : https://bit.ly/3iNKcmF

Implemented Data Visualization Projects : https://bit.ly/3XDpm8a

Implemented Data Mining Projects : https://bit.ly/3kqViy5

Implemented Data Engineering Projects : https://bit.ly/3WHFXqF

Implemented Natural Language Processing Projects : https://bit.ly/3Hj1Is6